Projects

- ArchiSpace: Space architecture in analogue environments

- Robotic Terrain Mapping

- Discretized and Circular Design

- CV- and HRI-supported Planting

- Lunar Architecture and Infrastructure

- Robotic Drawing

- Rhizome 2.0: Scaling-up of Rhizome 1.0

- Rietveld Chair Reinvented

- Cyber-physical Furniture

- Rhizome 1.0: Rhizomatic off-Earth Habitat

- D2RP for Bio-Cyber-Physical Planetoids

- Circular Wood for the Neighborhood

- Computer Vision and Human-Robot Interaction for D2RA

- Cyber-physical Architecture

- Hybrid Componentiality

- 100 Years Bauhaus Pavilion

- Variable Stiffness

- Scalable Porosity

- Robotically Driven Construction of Buildings

- Kite-powered Design-to-Robotic-Production

- Robotic(s in) Architecture

- F2F Continuum and E-Archidoct

- Space-Customizer

Computer Vision and Human-Robot Interaction for D2RA

Year: 2018-2022

Project leader: Henriette Bier

Project team: Henriette Bier, Sina Mostafavi, Yu-Chou Chiang, Arwin Hidding, Vera Laszlo, Amir Amani and MSc students from BK TUD and DIA; Seyran Khademi and Casper van Engelenburg from BK and EWI; Mariana Popescu from CiTG; Luka Peternel and Micah Prendergast, from 3ME.

Collaborators / Partners: DIA, Library TU Delft, Dutch Growth Factory

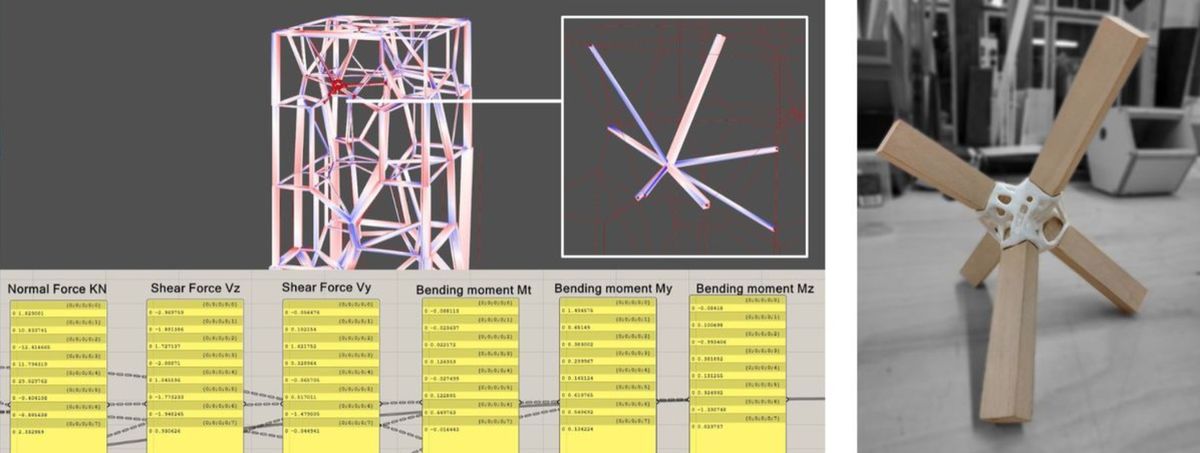

In a first phase, the Design-to-Robotic-Assembly project showcased an integrative approach for stacking architectural elements with varied sizes in multiple directions. Several processes of parametrization, structural analysis, and robotic assembly were algorithmically integrated into a Design-to-Robotic-Production method. This method was informed by the systematic control of density, dimensionality, and directionality of the elements. It was tested by building a one-to-one prototype, involving development and implementation of computational design workflow coupled with robotic kinematic simulation that is enabling the materialization of a multidirectional and multidimensional assembly system. The assembly was human assisted.

In a second phase, human-robot interaction is being developed. The robotic arm using ROS, ML, and computer vision techniques, such as OpenCV and DNN will be developed, to find location of nodes, detect the related linear elements, pick them with a gripper and transfer them to the intended location (in the next proximity of the node) one by one, while considering obstacle avoidance (human safety). Finally, the human will navigate the arm with his/her hand, and in order to move it to its final location (placed in the node). For that the following steps are considered: (1) Localization by creating a map of the environment (including nodes, linear elements, and human location); (2) Robot's location by object detections (using OpenCV and ROS to detect the correct node, and do the corresponding action); (3) Controlling and navigating the gripping toward the objects in order to pick up the objects; (4) Human action involving controlling the gripper manually to insert the linear element in the node.

The object detection has been implemented using the GUI provided by the ROS package for object recognition, in which objects can be 'marked' and saved for future detection. The detector will identify the objects in camera images and publish the details of the object through a topic. Using a 3D sensor, it can estimate the depth and orientation of the object.

Another approach has been implemented in a project involving reconfigurable furniture using the Yolo object detection model, which was trained on the created dataset to predict the object’s id number for the Voronoi cell with acceptable accuracy and confidence. Randomly placed cells that are detected in real time using the trained object detection model. The probability of the selected id numbers is shown in the right text box next to the object bounding box. Given this output and the known map of the furniture constellation, the user is instructed to put back the missing object to where it belongs.