Projects

Cyber-physical Furniture

Year: 2021-2023

Project leader: Henriette Bier

Project team: Henriette Bier, Arwin Hidding, Max Latour, Vera Laszlo and MSc students (RB lab), Seyran Khademi and Casper van Engelenburg (AiDAPT lab), Mariana Popescu (CiTG), Luka Peternel and Micah Prendergast (3ME)

Collaborators / Partners: UniFri (Hamed Alavi and Denis Lalanne), Tokencube (Klaus Starnberger), 3D Robot Printing/Dutch Growth Factory (Arwin Hidding), TU Library (Vincent Celluci)

Funding: 35K

Dissemination: CR 22, MAB23

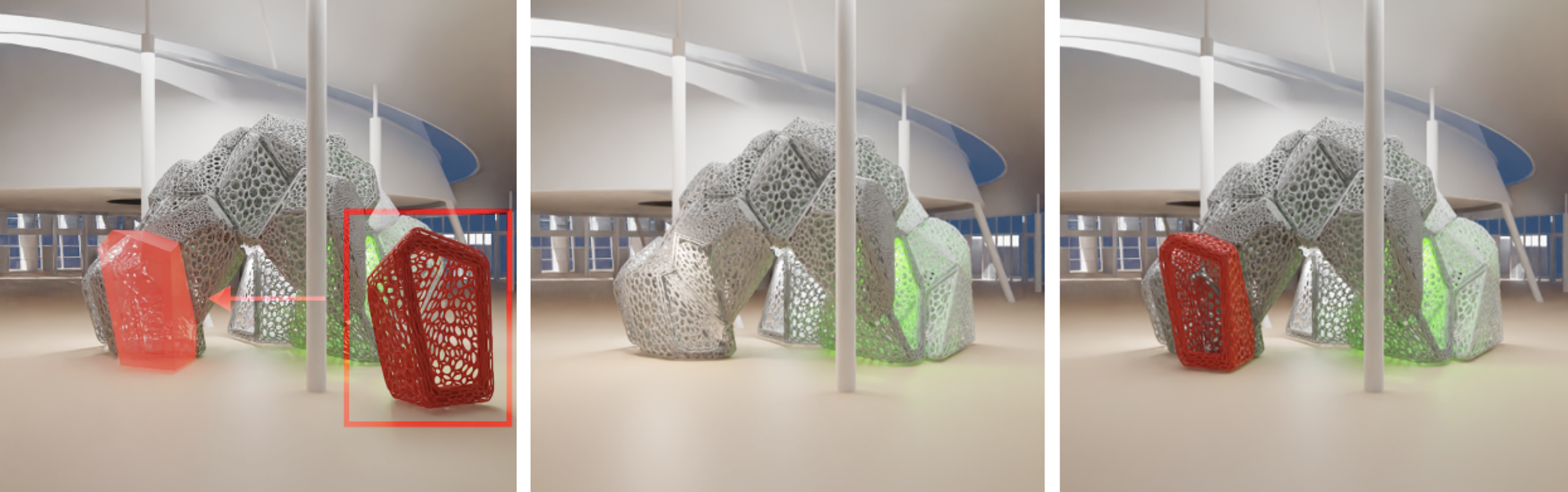

Cyber-physical Furniture relies on computational design for robotically producing and operating indoor and outdoor furniture. The design takes functional, structural, material, and operational aspects into account. Sensor-actuators integrated into the furniture enable communication with users via sounds, lights, etc., and via web-based apps. The furniture components range in size and functionality from pavilions and info or food booths to benches and stools. They are designed with structural, functional, environmental, and assembly considerations in mind. At the micro scale, the material displays varying degrees of porosity; the degree and distribution of porosity, i.e. density, are informed by functional, structural, and environmental requirements, taking into account both passive behaviours (structural strength, physical comfort, etc.) and active behaviours (interaction, etc.). At the meso scale, the component is informed mainly by the assembly logic, while at the macro scale, the assembly is informed by architectural considerations.

Furniture with integrated sensor-actuators may reconfigure, as in the Pop-up Apartment; may integrate plants and sensor-actuators, as in the BCP Planetoids; and may be flexible to some degree, as in the VS Chaise longue.

Materials considered are recycled wood, recyclable thermoplastic elastomers, and wood-based biopolymers. Smart operation is implemented by integrating sensor-actuators: sensors such as light-dependent resistors, infrared distance sensors, and pressure sensors inform building components, lights, speakers, ventilators, etc., allowing users to customise the operation and use of the furniture. Furthermore, these sensor-actuator mechanisms are monitored online, for instance:

- Temperature and humidity are measured, and when either is too low or too high a notification is sent to an app; lights and sounds are also considered as means of communication;

- Locations of interactive furniture are shown on a map and the movement of visitors is tracked;

- Users can reconfigure furniture, interact with it (for instance, by watering and/or sun-shading it), and communicate via an online app.
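The first monitoring case in the list above, temperature and humidity thresholds that trigger app notifications, could be sketched roughly as follows. This is only an illustrative sketch: the limit values, the quantity names (`temperature_c`, `humidity_pct`), and the functions `check_reading` and `notifications` are assumptions made for the example and are not taken from the project.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Range:
    """Acceptable interval for one measured quantity."""
    low: float
    high: float


# Hypothetical limits: the project description does not state thresholds.
LIMITS = {
    "temperature_c": Range(low=5.0, high=30.0),
    "humidity_pct": Range(low=30.0, high=70.0),
}


def check_reading(quantity: str, value: float) -> Optional[str]:
    """Return a notification message if the value is out of range, else None."""
    limits = LIMITS[quantity]
    if value < limits.low:
        return f"{quantity} too low: {value} < {limits.low}"
    if value > limits.high:
        return f"{quantity} too high: {value} > {limits.high}"
    return None


def notifications(readings: dict[str, float]) -> list[str]:
    """Collect messages for every out-of-range reading in one sensor sample."""
    messages = []
    for quantity, value in readings.items():
        message = check_reading(quantity, value)
        if message is not None:
            messages.append(message)
    return messages


if __name__ == "__main__":
    sample = {"temperature_c": 35.0, "humidity_pct": 50.0}
    for message in notifications(sample):
        print(message)
```

In a deployed setup the returned messages would be forwarded to the app, or mapped to lights and sounds on the furniture itself; that transport layer is left out here because the source does not describe it.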

Reconfiguration may be implemented using object and placement recognition, a well-known computer vision task for which model architectures are readily available. The objects, i.e. building components, are labelled with ID numbers that serve as the ‘keys’ to their correct placement within the larger constellation of building components. The project addresses the question of how robotics is integrated into user-driven building processes and the environment in order to make production efficient and utilisation smart. The distributed sensor-actuators are conceived as intelligent networked components, locally driven by people’s preferences and environmental conditions.
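The idea of ID numbers acting as keys to correct placement can be illustrated with a small sketch. It assumes that a recognition step has already produced a mapping from detected component IDs to the slots they occupy. The plan `TARGET_PLAN`, the slot names, and the helper functions are hypothetical and stand in for whatever representation the project actually uses.

```python
# Hypothetical target plan: component ID -> slot in the constellation.
TARGET_PLAN = {101: "A1", 102: "A2", 103: "B1"}


def misplaced_components(
    detections: dict[int, str], plan: dict[int, str]
) -> dict[int, tuple[str, str]]:
    """Return {component_id: (detected_slot, expected_slot)} for wrong placements.

    `detections` is assumed to come from an object and placement
    recognition step; IDs that are not in the plan are ignored.
    """
    errors = {}
    for component_id, detected_slot in detections.items():
        expected_slot = plan.get(component_id)
        if expected_slot is None:
            continue  # unknown ID, not part of this constellation
        if detected_slot != expected_slot:
            errors[component_id] = (detected_slot, expected_slot)
    return errors


def missing_components(detections: dict[int, str], plan: dict[int, str]) -> list[int]:
    """Return the IDs in the plan that were not detected at all, sorted."""
    return sorted(set(plan) - set(detections))


if __name__ == "__main__":
    seen = {101: "A1", 102: "B1"}  # 102 sits in the wrong slot, 103 is absent
    print(misplaced_components(seen, TARGET_PLAN))
    print(missing_components(seen, TARGET_PLAN))
```

Keying the plan by component ID keeps the check independent of how the detections are produced, so the recognition model can be swapped without changing the placement logic.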